# Best Practices

Naming and architectural conventions that are considered best practice

# Field Conventions

General conventions about field creation, grouping, naming, etc.

# General Conventions

All Naming Conventions Are [RFC 2119](https://www.ietf.org/rfc/rfc2119.txt) and [RFC 6919](https://tools.ietf.org/html/rfc6919) compliant.

1. All field API names **MUST** be written in English, even when the label is in another language.

2. All field API names **MUST** be written in PascalCase.

3. Fields **SHOULD NOT** contain an underscore in the fields name, except where explicitly defined otherwise in these conventions.

4. Fields generally **MUST (but you probably won't)** contain a description.

5. In all cases where the entire purpose of the field is not evident by reading the name, the field **MUST** contain a description.

6. If the purpose of the field is ambiguous, the field **MUST** contain a help text. In cases where the purpose is clear, the help text **COULD** also be defined for clarity's sake.

7. Field API names should respect the following prefixes and suffixes.2 Prefixes and Suffixes **SHALL NOT** be prepended by an underscore.

Field Type

Prefix

Suffix

MasterDetail

`Ref`

Lookup

`Ref`

Formula

`Auto`

Rollup Summary

`Auto`

Filled by automation (APEX)1

`Trig`

Picklist or Multipicklist

`Pick`

Boolean

`Is` or `IsCan`3

*1 Workflows, Process Builders and Flows are not included in this logic because these automations either allow field name modifications with no error, or can be modified by an administrator. If fields are created for the sole purpose of being filled by automation (e.g. fields that will be used in roll-up summaries), a consultant **WOULD PROBABLY** use the Trig suffix anyway, to indicate that users cannot set the data themselves.*

*2 While norms for other field types were considered, e.g. to make sure number, currency and percentage fields were easily recognizable, they were discarded as being too restrictive for an admin. Fixing type mismatches in this case is easily solved by casting the value to the correct type using either `TEXT()` or `VALUE()` functions.*

*3`IsCan` replaces "Can", **e.g.** `CanActivateContract` becomes `IsCanActivateContract`. This is to enable searching for all checkboxes on a page with a single query.*

# Grouping fields

1. If the organization is home to multiple services, the field `API` name ***SHOULD*** be prepended with the name of the service that required the field, followed by an underscore.

- This ***MUST NOT*** be the case if there is only one service using the object.

2. If several services use the field, or the field was originally required by a service before being used by others: the field `API` name ***MUST (but you probably won't)*** be prepended with the name of the service that is responsible for the user story that lead to the field creation, followed by an underscore. The Description of the field ***MUST*** indicate which services use the field.1

3. In the case the field is use differently by different services, the Description of the field ***MUST*** contain a summary description of each use.

4. If a field is created to host a value for technical reasons, but is not or should not be displayed to the users, the `API` name ***MUST*** be prefixed with `TECH` and an underscore.

5. If more than 50 fields are created on an object, a consultant ***SHOULD*** consider using prefixes to group fields in the same manner as technical fields, in the format of `$Groupname` followed by an underscore.

## Examples

Object

Field type

Comment

Field Label

Field API Name

Field Description

Case

Lookup

Looks up to Account

Service Provider

ServiceProviderRef\_\_c

Links the case to the Service Provider who will conduct the task at the client's.

Account

Formula

Made for the Accounting department only

Solvability

Accounting\_SolvabilityAuto\_\_c

Calculates solvability based on revenue and expenses. Sensitive data, should not be shared.

Contact

Checkbox

Sponsored ?

IsSponsored\_\_c

Checked if the contact was sponsored into the program by another client.

*1 While modifying API names post-deployment is notoriously complicated, making sure that field are properly recognizable is better in the long term than avoiding a maintenance during a project. Such modifications **SHOULD** be taken into account while doing estimations.*

# Workflow Conventions

Naming and structural conventions related to workflows

# Workflow Triggers

**Workflow Rules (along with Process Builders) are now on a deprecation / End-of-Life plan. Existing Workflow Rules will continue to operate for the foreseeable future, but in the near future (Winter 23) Salesforce will begin to prevent creating new Workflow Rules. Establish a plan to migrate to Flows, and create any new automation using Flow Builder.**

*These naming convention best practices will remain in place for reference purposes so long as Workflow Rules may exist in a Salesforce org*

A workflow trigger ***MUST*** always be named after what Triggers the workflow, and not the actions. In all cases possible, the number of triggers per object ***SHOULD*** be limited - reading the existing trigger names allows using existing ones when possible. Knowing that all automations count towards Salesforce allotted CPU time per record, a consultant ***SHOULD*** consider how to limit the number of workflows in all cases.

1. All Workflow Triggers ***MUST*** contain a Bypass Rule check.

2. A Workflow Trigger ***SHALL*** always start by `WF`, followed by a number corresponding to the number of workflows on the triggering Object, followed by an underscore.

3. The Workflow Trigger name ***MUST*** try to explain in a concise manner what triggers the WF. Note that conciseness trumps clarity for this field.

4. All Workflows Trigger ***MUST*** have a description detailing how they are triggered.

5. Wherever possible, a Consultant ***SHOULD*** use operators over functions.

#### Examples

Object

WF Name

Description

WF Rule

Invoice

WF01\_WhenInvoicePaid

This WF triggers when the invoice Status is set to "Paid". Triggered from another automation.

This WF triggers every time the Status of the invoice is changed.

`!$User.BypassWF__c && ISCHANGED(Status__c)`

Contact

WF01\_C\_IfStreetBlank

This WF triggers on creation if the street is Blank

`!$User.BypassWF__c && ISBLANK(MailingStreet)`

# Workflow Field Updates

**Workflow Rules (along with Process Builders) are now on a deprecation / End-of-Life plan. Existing Workflow Rules will continue to operate for the foreseeable future, but in the near future (Winter 23) Salesforce will begin to prevent creating new Workflow Rules. Establish a plan to migrate to Flows, and create any new automation using Flow Builder.**

1. A Workflow Field Update ***MUST*** Start with `FU`, followed by a number corresponding to the number of field updates on the triggering Object.

2. A Workflow Field Update ***SHOULD*** contain the Object name, or an abbreviation thereof, in the Field Update Name.1

3. A Workflow Field Update ***MUST*** be named after the field that it updates, and then the values it sets, in the most concise manner possible.

4. The Description of a Workflow Field Update ***SHOULD*** give precise information on what the field is set to.

#### Examples

Object

FU Name

Description

Contact

FU01\_SetEmailOptOut

Sets the Email Opt Out checkbox to TRUE.

Invoice

FU02\_SetFinalBillingStreet

Calculates the billing street based on if the client is billed alone, via an Agency, or via a mother company. Part of three updates that handle this address.

Contact

FU03\_CalculateFinalAmount

Uses current Tax settings and information to set the final amount

*1 While Field Updates are segregated by Object when viewed through an IDE or through code, the UI offers no such ease of use. If this is not done, a consultant **WOULD PROBABLY** create list views for field updates per Object.*

# Workflow Email Alerts

Workflow Rules (along with Process Builders) are now on a deprecation / End-of-Life plan. Existing Workflow Rules will continue to operate for the foreseeable future, but in the near future (Winter 23) Salesforce will begin to prevent creating new Workflow Rules. Establish a plan to migrate to Flows, and create any new automation using Flow Builder. **Email Alerts are NOT part of the Workflow Rule deprecation plan- you can and should continue to configure and use Email Alerts. Flows can reference and execute these Email Alerts**

1. A Workflow Email Alert ***MUST*** Start with `EA`, followed by a number corresponding to the number of email alerts on the triggering Object.

2. A Workflow Email Alert ***SHOULD*** contain the Object name, or an abbreviation thereof, in the Field Update Name.

3. A Workflow Email Alert's Unique Name and Description ***SHOULD*** contain the exact same information, except where a longer description is absolutely necessary.1

4. A Workflow Email Alert ***SHOULD*** be named after the type of email it sends, or the reason the email is sent.

Note that declaratively, the Name of the template used to send the email is always shown by default in Email Alert lists.

#### Examples

Object

EA Name

Description

Invoice

EA01\_Inv\_SendFirstPaymentReminder

EA01\_Inv\_SendFirstPaymentReminder.

Invoice

EA02\_Inv\_SendSecondPaymentReminder

SendSecondPaymentReminder

Contact

EA03\_Con\_SendBirthdayEmail

EA03\_Con\_SendBirthdayEmail

*1 Email Alert's Unique Names are generated from the Description by default in Salesforce. As Email Alerts can only send emails, this convention describes a less exhaustive solution than could be, at the profit of speed while creating Email Alerts declaratively.*

# Workflow Tasks

Workflow Rules (along with Process Builders) are now on a deprecation / End-of-Life plan. Existing Workflow Rules will continue to operate for the foreseeable future, but in the near future (Winter 23) Salesforce will begin to prevent creating new Workflow Rules. Establish a plan to migrate to Flows, and create any new automation using Flow Builder.

1. A Workflow Task Unique Name ***MUST*** Start with `TSK`, followed by a number corresponding to the number of tasks on the triggering Object.

2. A Workflow Task Unique Name ***COULD*** contain the Object name, or an abbreviation thereof, in the Field Update Name. This is to avoid different conventions for Workflow Actions in general.

Most information about tasks are displayed by default declaratively, and creating a task should rarely impact internal processes or external processes in such a manner that urgent debugging is required. As Users will in all cases never see the Unique Name of a Workflow Task, it is not needed nor recommended to norm them more than necessary.

# Workflow Outbound Messages

Workflow Rules (along with Process Builders) are now on a deprecation / End-of-Life plan. Existing Workflow Rules will continue to operate for the foreseeable future, but in the near future (Winter 23) Salesforce will begin to prevent creating new Workflow Rules. Establish a plan to migrate to Flows, and create any new automation using Flow Builder. **Outbound Messages are NOT part of the Workflow Rule deprecation plan- you can and should continue to configure and use Outbound Messages when appropriate. Flows can reference and execute these Outbound Messages**

1. An Outbound Message Name ***MUST*** Start with `OM`, followed by a number corresponding to the number of outbound messages on the triggering Object.

2. An Outbound Message Name ***COULD*** contain the Object name, or an abbreviation thereof, in the Field Update Name. This is to avoid different conventions for Workflow Actions in general.

3. An Outbound Message ***MUST*** be named after the Service that it send information to, and then information it sends in the most concise manner possible.

4. The Description of An Outbound Message ***SHOULD*** give precise information on why the Outbound Message is created.

5. Listing the fields sent by the Outbound Message is ***NOT RECOMMENDED***.

#### Examples

Object

EA Name

Description

Invoice

OM01\_Inv\_SendBasicInfo

Send the invoice header to the client software.

Invoice

OM02\_Inv\_SendStatusPaid

Sends a flag that the invoice was paid to the client software.

Contact

OM01\_SendContactInfo

Sends most contact information to the internal Directory.

# Validation Rule Conventions

Conventions about validation rules, naming, creation, etc

# Validation Rule Metadata Conventions

1. The Validation Rule Name ***MUST*** try to explain in a concise manner what the validation rule prevents. Note that conciseness trumps clarity for this field.

2. All validation Rules `API` names ***MUST*** be written in [PascalCase](https://en.wikipedia.org/wiki/PascalCase).

3. Validation Rules ***SHOULD NOT*** contain an underscore in the fields name, except where explicitly defined otherwise in these conventions.

4. A Validation Rule ***SHALL*** always start by a shorthand of the object name (example: `ACC`, then the string `VR`, followed by a number corresponding to the number of validation rules on the triggering Object, followed by an underscore.

5. The Validation Rule Error Message ***MUST*** contain an error code indicating the number of the Validation Rule, in the format `[VRXX]`, `XX` being the Validation Rule Number.1

6. Validation Rules ***MUST*** have a description, where the description details the Business Use Case that is addressed by the VR. A Description ***SHALL NOT*** contain technical descriptions of what triggers the VR - the Validation Rule itself ***SHOULD*** be written in such a manner as to be clearly readable.

*1 While including an error code in a user displayed message may be seen as strange, this will allow any admin or consultant to find exactly which validation rule is causing problems when user need only communicate the end code for debugging purposes.*

# Validation rules writing conventions

1. All Validation Rules MUST contain a Bypass2 Rule check.

2. Wherever possible, a Consultant ***SHOULD*** use operators over functions.

3. All possible instances of `IF()` ***SHOULD*** be replaced by `CASE()`

4. Referencing other formula fields should be avoided at all cost.

5. In all instances, `ISBLANK()` should be used instead of `ISNULL`, as per [this link](https://help.salesforce.com/apex/HTViewHelpDoc?id=customize_functions.htm&language=en#ISBLANKDef).

6. Validation Rules ***MUST NOT*** be triggered in a cascading manner.1

### Examples

The status cannot advance further if it is not approved. \[OPP\_VR02\]

The status cannot advance further if it is not approved. \[OPP\_VR02\]

1 Cascading Validation Rules are defined as VRs that trigger when another VR is triggered. Example: A field is mandatory if the status is Lost, but the field cannot contain less than 5 characters. Doing two validation rules which would trigger one another would result in a user first seeing that the field is mandatory, then saving again, and being presented with the second error. In this case, the second error should be displayed as soon as the first criteria is met.

2 See [main Bypasses page](https://wiki.sfxd.org/books/best-practices/page/bypasses) for more info on the topic

# Bypasses

We reference "bypasses" in a number of these conventions.

Bypasses are administrator-defined checkboxes that allow you to massively deactivate automations and validation rules with a simple click. They avoid that awkward feeling when you realize that you need to turn them off one by one so you can do that huge data update operation you're planning.

If your validation rules bypasses look like this, this is bad: $Profile.id <> '00eo0000000KXdC' && somefield__c = 'greatvalue'

The most maintainable way to create a bypass is to create a hierarchical custom setting to store all the bypass values that you will use. This means that the custom setting should be named "Bypasses", and contain one field per bypass type that you want to do.

Great-looking bypass setup right there

This setup allows you to create bypasses either by profile or by user, like so:

This allows you to reference bypasses directly in your validation rules by simply letting Salesforce handle knowing whether or not the bypass is active or not for that specific user or profile. In the validations themselves, using this bypass is as easy as referencing it via the formula builder. An example for validation rules could be:

!$Setup.Bypasses__c.IsBypassVR__c && Name = "test"

As you can also see in the above screenshots, you can use a single custom setting to host all the bypasses for your organization, including Validation Rules, Workflows, Triggers, etc. As additional examples, you can see that "Bypass Emails" and "Bypass Invoicing Process" are also present in the custom setting - adding the check for these checkboxes in the automations that trigger emails, and automations that belong to Invoicing respectively, allow partial deactivation of automations based on criteria.

# Data Migration Best Practices

An attempt to help you not delete your production database

# 1 - Data Migrations Checklist

The following is a semi-profanity-ridden attempt at explaining one way to do data migrations while following best practices. It is rather long and laced with colorful language. If you have read it already, or if you want to avoid the profanity, you can consult the following checklist in the *beautiful* table below.

Note that all elements are considered mandatory.

As a quick note, and a reminder even if you've read the whole version:

DO NOT MODIFY SOURCE DATA FILES, EVER.

If you're doing data migrations, either use a script to modify the source files and save the edited version, or use excel workbooks that open the source file and then save the edited result elsewhere. Yes, even if the source is an excel file.

Why? Because sources change. People forget stuff, files aren't well formatted, shit gets broken, and people are human - meaning that one-time data import is actually going to be done multiple times. Edit the source file, and get to do everything all over again. Use scripts or workbooks to do the transformations ? Point that to the new source file and BAM Bob's your uncle.

Scripts you might want to use:

- [OpenRefine](http://openrefine.org/)

- [SFXD's PSCSV](https://github.com/SFXD/PSCSV)

- Salesforce's official [Data Migration Tool](https://github.com/forcedotcom/Data-Migration-Tool) for cross-org data loading

- [Amaxa](https://gitlab.com/davidmreed/amaxa) for related objects, done by David Reed

Or, if you prefer excel, open a blank workbook, Import the source file via the "data" ribbon tab, select "from text/csv" (or whatever matches based on your source type), then save it as both:

- the construction excel,

- a NEW csv file after doing your changes in formula columns.

That way when you change the source file you can just open the construction book again and resave.

Action

Completed?

DO YOU HAVE A BACKUP

Is it UTF-8 encoded

Did you check it is readable and well formatted

Does it have carriage returns stored as carriage returns, not as spaces

Is it up to date

Do you have a mapping for every object and field

Did you determine an ExternalID for each object

Did you determine source of truth (whether to overwrite or not) for reach field

Did the client sign off on the mapping

Do you have the source data

Is it in a format your tool can read

Are dates and date-times well formatted (yyyy-mm-dd || yyyy-mm-ddT00:00:00z) and are times exported in UTC

Are field lengths respected (emails not longer than 80 chars, Names not longer than 40, etc)

Do numbers have the right separators

Do all tables have the required data for loading (Account Name, Contact Last Name, etc etc etc)

Do all fields that have special characters or carriage returns have leading and trailing qualifiers (")

Do all records have an external Id

Did you do a dummy load with only one field mapped to make your sure tool can read the entire file

Are you doing transformations

Did you document them all

Did you automate them so you can run them again with a click

Did you read the LDV guide if you are loading more than 1M records

Did you activate validation rules bypass

Did you check all automations to deactivate any that should be, including email alerts

Did you warn the client about when you would do the data load

Did you warn the client about how long the data load would take

--------- run the migration -----------

Did you reactivate all automations

Did you remove validation rule bypass

Did you tell the client you were done and they could check

Did you check the quality of the data

# 2 - Data Migration Step-by-step - Before Loading

### Introduction

You're going to have to map data from various sources into Salesforce. **IT'S THAT BIG MIGRATION TIME.**

Well let's make sure you don't have to do it again in two days because data is missing, or delete production data.

Salesforce does not back up your data.

If you delete your data, and the amount deleted is bigger than what is in the recycle bin, if will be deleted forever. You could try restoring it via Workbench, praying that the automated Salesforce jobs haven't wiped your data yet.

If you update data, the moment the update hits the database (the DML) is done, the old data is lost. Forever.

If you don't have a backup, you could try seeing if you turned on field history.

If worst comes to worst you can pay 10 000€ (not joking, see [here](https://help.salesforce.com/apex/HTViewSolution?id=000003594)) to Salesforce to restore your data. Did I mention that Salesforce would give you a CSV extract of the data you had in Salesforce ? Yeah they don't restore the org for you. You'd still need to restore it table per table with a data loading tool.

But let's try to avoid these situations, by following these steps. These steps apply to any massive data load, but especially in case of deletions.

### GENERAL DATA OPERATIONS STUFF

#### Tools

Do not use Data Loader if you can avoid it. If you tried doing a full data migration with Dataloader, you will not be helped. By this I mean I will laugh at you and go back to drinking coffee. Dataloader is a BAD tool.

[Amaxa](https://gitlab.com/davidmreed/amaxa) is awesome and handles objects that are related to one another. It's free and awesome.

[Jitterbit](https://www.jitterbit.com/solutions/salesforce-integration/salesforce-data-loader/) is like Dataloader but better. It's free. It's getting old though, and some of the newer stuff won't work like Time fields.

[Talend](https://www.talend.com/) requires some tinkering but knowing it will allow you to migrate from almost anything, to almost anything.

Hell you can even use SFDX to do data migrations.

But yeah don't use dataloader. Even Dataloader.io is better, and that's a paid solution. Yes I would recommend you literally pay rather than use Dataloader.

If you MUST use dataloader, EXPORT THE MAPPINGS YOU ARE DOING. You can find how to do so in the data loader user guide: https://developer.salesforce.com/docs/atlas.en-us.dataLoader.meta/dataLoader/data\_loader.htm

Even if you think you will do a data load only once, the reality is you will do it multiple times. Plus, for documentation, having the mapping file is best practice anyway. Always export the mapping, or make sure it is reusable without rebuilding it, whatever the tool you use.

#####

##### Volume

If you are loading a big amount of data or the org is mature, read [this document](https://resources.docs.salesforce.com/sfdc/pdf/salesforce_large_data_volumes_bp.pdf) entirely before doing anything. LDV starts at a few million records in general, or several gigabytes of data. Even if you don't need this right now, reading it should be best practice in general.

Yes, read the whole thing. The success of the project depends on it, and the document is quite short.

#### Deletions

If you delete data in prod without a backup, this is bad.

If the data backup was not checked, this is bad.

If you did not check automations before deleting, this is also bad.

Seriously, before deleting ANYTHING, EVER:

- get backup

- check automations

- check backup is valid.

####

#### Data Mapping

For Admins or Consultants: you should avoid mapping the data yourself. Any data mapping you do should be with someone from the end-user's who can understand you are saying. If no one like this is available, spend time with a business operative so you can do the mapping and make them sign off on it.

The client signing off on the mapping is drastically important, as this will impact the success of the data load, AND what happens if you do not successfully load it - or if the client realizes they forgot something.

Basic operations for a data mapping are as follow:

- study Source and target data model

- establish mapping from table to table, field to field, or both if necessary.

- for each table and field, establish Source of Truth, meaning which data should take priority if conflicts exist

- establish an ExternalId from all systems to ensure data mapping is correct

- define which users can see what data. Update permissions if needed.

#### Data retrieval

Data needs to be extracted from source system. This can be via API, an ETL, a simple CSV extract, etc. Note that in general it is better if storing data as CSV can be avoided - ideally you should do a point-to-point load which simply transforms the data - but as most clients can only extract csv, the following best practices apply:

- Verify Data Format

- Date format yyyy-mm-dd

- DateTime format yyyy-mm-ddT00:00:00z

- Emails not longer than 80 char

- Text containing carriage returns is qualified by "

- Other field-specific verifications re. length and separators for text, numbers, etc.

- Verify Table integrity

- Check that all tables have basic data for records:

- LastName, Account for Contact

- Name for Account

- Any other system mandatory fields

- Check that all records have the agreed-upon external Ids

- Verify Parsing

- Do a dummy load to ensure that the full data extracted can be mapped and parsed by the selected automation tool

#### Data Matching

You should already have created External Ids on every table, if you are upserting data.

If not, do so now.

DO NOT match the data in excel.

Yes, INDEX(MATCH()) is a beautiful tool. No, no one wants you to spend hours doing that when you could be doing other stuff, like drinking a cold beer.

If you're using VLOOKUP() in Excel, stop. Read up on how to use INDEX(MATCH()). You will save time, the results will be better, and you will thank yourself later. Only thing to remember is to always add "0" as a third parameter to "MATCH" so it forces exact results.

Store IDs of the external system in the target tables, in the ExternalId field. Then use that when recreating lookup relationships to find the records.

This saves time, avoids you doing a wrong matching, and best of all, if the source data changes, you can just run the data load operation again on the new file, without spending hours matching IDs.

# 3 - Data Migration Step-by-step - Loading

#### FIRST STEPS

1. Login to Prod. Is there a weekly backup running, encoded as UTF-8, in Setup > Data Export

- Nope

Select encoding UTF-8 and click "Export Now". This will take hours.

Turn that weekly stuff on.

Make sure the client KNOWS it's on.

Make sure they have a strategy for downloading the ZIP file that is generated by the extract weekly.

- Yup

- Is it UTF-8 and has run in the last 48 hours ?

- Yup

Confer with the client to see if additional backup files are needed. Otherwise, you're good.

- Nope

If the export isn't UTF-8, it's worthless.

If it's more than 48h old, confer with the client to see if additional backup files are needed. In all cases, you should consider doing a new, manual export.

SERIOUSLY MAKE SURE YOU CHANGE THE ENCODING. Salesforce has some dumb rule of not defaulting to UTF-8. YOU NEED UTF-8. Accents and ḍîáꞓȑîȶîꞓs exist. Turns out people like accents and non-roman alphabets, who knew?

- If Data Export is not an option because it has run too recently, or because the encoding was wrong, you can also do your export by using whatever too you want to Query all the relevant tables. Remember to set UTF-8 as the encoding on both export and import.

2. Check the org code and automation

- Seriously, look over all triggers that can fire when you upload the data.

You don't want to be that consultant that sent a notification email to 50000 people.

Just check the triggers, WFs, PBs, and see what they do.

If you can't read triggers, ask a dev to help you.

Yes, Check the Workflows and Process Builders too. They can send Emails as well.

- Check Process Builders again. Are there a lot that are firing on an object you are loading ? Make note of that for later, you may have to deactivate them.

3. Check data volume.

- Is there enough space in the org to accommodate the extra data ? (this should be pre-project checks, but check it again)

- Are volumes to load enough to cause problems API-call wise ?

If so, you may need to consider using the BULK jobs instead of normal operations

- In case data volumes are REALLY big, you will need to abide by LDV (large data volume) best practices, including not doing upserts, defering sharing calculations, and grouping records by Parent record and owner before uploading. Full list of these is available in the pdf linked above and [here](https://resources.docs.salesforce.com/sfdc/pdf/salesforce_large_data_volumes_bp.pdf).

#### PREPARING THE JOBS

Before creating a job, ask yourself which job type is best.

Upsert is great but is very resource intensive, and is more prone to RECORD\_LOCK than other operation types. It also takes longer to complete.

Maybe think about using the BULK Api.

In all cases, study what operation you do and make sure it is the right one.

Once that is done...

You are able to create insert, upsert, query and deletion jobs, and change select parts of it. That's because you are using a real data loading tool.

This is important because this means you can:

- Create a new Sandbox

- In whatever tool you're using, create the operations you will do, and name them so you know in which order you need to trigger them.

- Prepare each job, point them to a sandbox.

- Do a dummy load in sandbox. Make sure to set the start line to something near the end so you don't clog the sandbox up with all the data.

- Make sure everything looks fine.

If something fails, you correct the TRANSFORMATION, not the file, except in cases where it would be prohibitively long to do so. Meaning if you have to redo the load, you can run the same scripts you did before to have a nice CSV to upload.

#### GETTING READY TO DO THAT DATA OPERATION

This may sound stupid but warn your client, the PM, the end users that you're doing a data load. There's nothing worse than losing data or seeing stuff change without knowing why. Make sure key stakeholders are aware of the operation, the start time, and the estimated end time. Plus, you need them to check the data afterwards to ensure it's fine.

You've got backups of every single table in the Production org.

Even if you KNOW you do, you open the backups and check they are not corrupt or unreadable. Untested backups are no backups.

You know what all automations are going to do if you leave them on.

You talked with the client about possible impacts, and the client is ready to check the data after you finish your operations.

You set up, with the client, a timeframe in which to do the data operation.

If the data operation impacts tables that users work on normally, you freeze all those users during that timeframe.

Remember to deactivate any PB, WF, APEX that can impact the migration. You didn't study them just to forget them.

If this is an LDV job, take into account any considerations listed above.

#### DATA OPERATION

1. Go to your tool and edit the Sandbox jobs.

2. Edit the job Login to point to production

3. Save all the jobs.

4. You run, in order, the jobs you prepared.

When the number of failures is low enough, study the failure files, take any corrective action necessary, then use those files as a new source for a new data load operation.

Continue this loop until the number of rejects is tolerable.

This will ensure that if some reason you need to redo the entire operation, you can take the same steps in a much easier fashion.

Once you are done, take the failure files, study them, and prepare a recap email detailing failures and why they failed. It's their data, they have a right to know.

#### POST-MIGRATION

- Make sure everything looks fine, that you carried everything over.

- Warn their PM that the migration is done and request testing from their side.

- If you deactivated Workflows or PBs or something so the migration passes, ACTIVATE THEM BACK AGAIN.

- Unfreeze users if needed.

Go drink champagne.

####

#### IF SHIT DOESN'T LOOK RIGHT

You have a backup. Don't panic.

- Identify WTF is causing data to be wrong.

- Fix that.

- Get your backup, restore data to where it was before the fuckup. Ideally, only restore affected fields. If needed, restore everything.

- Redo the data load if needed.

# Getting the right (number of) Admins

Salesforce Success Services

Achieve Outstanding CRM Administration

Because Salesforce takes care of many traditional administration tasks, system administration is easier than ever before. Setting up, customizing the application, training users, and “turning on” the new features that become available with each release—all are just a few clicks away. The person responsible for these tasks is your Salesforce CRM administrator. Because this person is one of the most important resources in making your implementation a success, it’s important to carefully choose your administrator and to continually invest in his or her professional development. You can also choose to have Salesforce handle administrator tasks for you.

Note: Larger enterprise implementations often use a role called Business Analyst or Business Application Manager as well, particularly for planning the implementation and ensuring adoption once the solution is live. Although the most common customization tasks don’t require coding, you may want to consider using a professional developer for some custom development tasks, such as writing Force.com code (Apex), developing custom user interfaces with Force.com pages (Visualforce), or completing complex integration or data migration tasks.

In many ways, the administrator fills the role played by traditional IT departments: answering user questions, working with key stakeholders to determine requirements, customizing the application to appeal to users, setting up reporting and dashboards to keep managers happy, keeping an eye on availability and performance, activating the features in new releases, and much more. This paper will help you to make important choices when it comes to administering your Salesforce CRM application, including:

Finding the right person(s)

Investing in your administrator(s)

Providing adequate staffing

Getting help from Salesforce

Find the right administrator

Who would make an ideal Salesforce CRM administrator? Experience shows that successful administrators can come from a variety of backgrounds, including sales, sales operations, marketing, support, channel management, and IT. A technical background may be helpful, but is not necessary. What matters most is that your administrator is thoroughly familiar with the customization capabilities of the application and responsive to your users. Here are some qualities to look for in an administrator:

A solid understanding of your business processes

Knowledge of the organizational structure and culture to help build relationships with key groups

Excellent communication, motivational, and presentation skills

The desire to be the voice of the user in communicating with management

Analytical skills to respond to requested changes and identify customizations

Invest in your administrator

Investing in your administrator will do wonders for your Salesforce CRM solution. With an administrator who is thoroughly familiar with Salesforce CRM, you’ll ensure that your data is safe, your users are productive, and you get the most from your solution.

Salesforce offers both self-paced training and classroom training for administrators. For a list of free, self-paced courses, go to Salesforce Training & Certification. To ensure that your administrator is fully trained on all aspects of security, user management, data management, and the latest Salesforce CRM features, enrol your administrator in Administration Essentials (ADM201). The price of this course includes the cost of the certification that qualifies your administrators to become Salesforce.com Certified Administrators. For experienced administrators, Salesforce offers the Administration Essentials for Experienced Admins (ADM211) course.

Providing adequate staffing

The number of administrators (and, optionally, business analysts) required depends on the size of your business, the complexity of your implementation, the volume of user requests, and so on. One common approach for estimating the number of administrators you need is based on the number of users.

**Number of users**

**Administration resources**

1 – 30 users

< 1 full-time administrator

31 – 74 users

1+ full-time administrator

75 – 149 users

1 senior administrator; 1 junior administrator

140 – 499 users

1 business analyst, 2–4 administrators

500 – 750 users

1–2 business analysts, 2–4 administrators

> 750 users

Depends on a variety of factors

In addition to the user base, also consider the points below:

In small businesses, the role of the administrator is not necessarily a full-time position. In the initial stages of the implementation, the role requires more concentrated time (about 50 percent). After go-live, managing Salesforce CRM day to day requires much less time (about 10–25 percent)

If you have several business units that use Salesforce CRM solutions—such as sales, marketing, support, professional services, and so on—consider using separate administrators for each group, to spend between 50–100 percent of their time supporting their solutions.

Another common practice for large implementations is to use “delegated administrators” for specific tasks such as managing users, managing custom objects, or building reports.

If you operate in multiple geographic regions, consider using one administrator for each major region, such as North America, EMEA, and APAC. To decide how to classify regions, consider whether they have a distinct currency, language, business processes, and so on, and train your administrators in the multicurrency and multilanguage features. Also appoint a lead analyst or administrator who will coordinate the various regions.

If you need customization beyond the metadata (click not code) capabilities of Salesforce CRM or want to develop new applications, you may also need a developer to create, test, and implement custom code.

[https://help.salesforce.com/HTViewSolution?id=000007548](https://help.salesforce.com/HTViewSolution?id=000007548)

# Big Objects

Big objects are Salesforce's take on NoSQL (although it works just like common SQL). It allows large data storage on Salesforce's servers. Ideal for Big Data and compliance scenarios.

# Sample scenario - store all field changes for an object

In this scenario, the customer wants, for whatever reason, to track changes of all the fields in a single record. Salesforce provides the default field tracking, but it is available for only twenty fields per object. If this object we are talking about has more, then it is impossible to solve it with the standard, declarative tools.

Big objects are the ideal candidate for this, because we are talking about data that users probably don't need to report (big objects do not support reporting), there's a change that it is a lot of data (if the record is changed frequently), and possibly there's a legal reason for keeping those changes stored (compliance).

So to do that we'll need a trigger on the object, running preferably on the `after update` trigger event. At this point the record is already saved but the transaction is not yet committed to the database, so the changes were made and we get the difference using `Trigger.oldMap` to get the old version of the changed records.

After iterating through all the fields on the object, we check for differences, and for each one we instantiate a new big object. When the iteration ends we insert them immediately (using `Database.insertImmediate()`).

In this configuration, the big object's index would be the related record's Id, the field that was modified and the date/time stamp of the change (depending on requirements, one might want to spend some time thinking if it is best to have the timestamp before the field name). This way, if we wanted to display the data in a Lightning Component, for example, we could query the specific record data synchronously in Apex because of the indices created:

```SQL

SELECT

RecordId__c,

Field__c,

Timestamp__c,

OldValue__c,

NewValue__c

FROM ObjectHistory__b

WHERE RecordId__c = :theRecordId

```

# Mass Update Access to Objects And Fields For Profiles And Permission Sets

If you need to update Object-level permissions (CRED) or Field level Permissions (FLS) for a large number of Objects, Fields, Profiles, or Permission Sets, rather than manually clicking dozens of checkboxes on multiple pages, it is sometimes easier and faster to make those updates using tools like Data Loader.

This article describes how to make those updates, as well as relevant information about the data model regarding FLS and CRED. It is intended for declarative developers.

# Object Permissions - Basic Functionality

When dealing with Profiles and CRED there are three objects involved:

- **Profile** object

- **PermissionSet** object

- **ObjectPermissions** object

***Note:** Every Profile has a corresponding child PermissionSet record, as indicated by the ProfileId field on the PermissionSet record. When dealing with Permission Sets, the Profile object doesn’t factor in.*

For every combination of Profile and Object, there is a corresponding ObjectPermissions record with six boolean fields that control the access level for that Profile to that object. The same goes for Permission Sets. Those six fields are:

- `PermissionsCreate `

- `PermissionsDelete `

- `PermissionsEdit `

- `PermissionsRead `

- `PermissionsViewAllRecords `

- `PermissionsModifyAllRecords`

***Note:** If a Profile or Permission Set has no access to an object, then there is no ObjectPermissions record for that object/profile combination. You cannot have an ObjectPermissions record where all “permissions” fields are FALSE.*

In addition to these boolean fields, there are two other uneditable fields which indicate which object the record is related to (`sObjectType`), as well as the related Permission Set (`ParentId`). Remember, even if the ObjectPermissions record is controlling access for a Profile, it will be related to a Permission Set. That Permission Set will have the Id of the corresponding Profile in the `ProfileId` field.

When a Profile or Permission Set is granted access to an Object, Salesforce automatically creates a new ObjectPermissions record. When access to that Object is removed, Salesforce deletes that record.

# Field Permissions - Basic Functionality

Field-Level Security works very similarly to Object-Level Permissions. When dealing with Profiles and FLS, there are three objects involved:

***Note:** Every Profile has a corresponding child PermissionSet record, as indicated by the ProfileId field on the PermissionSet record. When dealing with Permission Sets, the Profile object doesn’t factor in.*

For every combination of Profile and Field, there is a corresponding FieldPermissions record. Each record has two boolean fields that control the access level for that Profile to that field. The same goes for Permission Sets. Those two fields are:

- `PermissionsEdit `

- `PermissionsRead `

***Note:** If a Profile or Permission Set has no access to a Field, then there is no FieldPermissions record for that Field/Profile combination. You cannot have a FieldPermissions record where all “permissions” fields are FALSE.*

In addition to these boolean fields, there are three other uneditable fields which indicate which Object the record is related to (`sObjectType`), which specific Field this record controls access to (`Field`), and the related Permission Set (`ParentId`). Remember, even if the FieldPermissions record is controlling access for a Profile, it will be related to a Permission Set. That Permission Set will have the Id of the corresponding Profile in the `ProfileId` field.

When a Profile or Permission Set is granted access to a Field, Salesforce automatically creates a new FieldPermissions record. When access to that Field is removed, Salesforce deletes that record.

# Query CRED And FLS Permissions - Examples

**Query All Permissions**

To get a list of every CRED setting for every Profile and Permission Set in Salesforce run the following query, or use Data Loader to export all ObjectPermissions records with the following fields:

```

`SELECT Id, ParentId, Parent.ProfileId, Parent.Profile.Name, SobjectType, PermissionsCreate, PermissionsDelete, PermissionsEdit, PermissionsRead, PermissionsViewAllRecords, PermissionsModifyAllRecords FROM ObjectPermissions`

```

To query all Field permissions use a similar query:

In order to limit your search to specific profiles, add a filter to the end using the `Parent.ProfileId` field . Example:

```

SELECT Id, (...) FROM (...) WHERE Parent.Profile.Name = 'Sales Manager'

```

Or if you have a list of profiles:

```

SELECT Id, (...) FROM (...) WHERE Parent.Profile.Name IN ('Sales Manager', 'Sales', 'Marketing')

```

**Query Permissions For Profiles Only (Or Permission Sets Only)**

To limit your query to only see permissions related to Profiles and not Permission Sets, add a filter to the end using the `Parent.ProfileId` field to make sure it’s not empty:

```

SELECT Id, (...) FROM (...) WHERE Parent.ProfileId != null

```

Conversely, to limit your query to only show permissions related to Permission Sets, adjust the filter:

```

WHERE Parent.ProfileId = null

```

**Query Permissions For Specific Objects**

In order to limit the Objects you want permissions for, add a filter to the end using the `SobjectType` field. Example:

```

SELECT Id, (...) FROM (...) WHERE SobjectType IN ('Account','Opportunity','Contact')

```

**Query Permissions For Specific Fields**

In order to limit the Fields you want permissions for, add a filter to the end using the `Field` field. Note that the values in the `Field` field include API name of the Object, followed by a period, then the API name of the Field. Example:

```

SELECT Id, (...) FROM (...) WHERE Field IN ('Account.Customer__c','Opportunity.Total__c','Contact.LastName')

```

# Updating, Deleting, and Adding Permissions

After running your query you will have a table describing access for all objects/fields **where at least one profile or permission set has some kind of access**. This is an important concept to understand. If no Profiles or Permission Sets have access to an Object or Field, there will not be a record for that object/field.

**Updating Permissions**

For existing ObjectPermissions/FieldPermissions records, you can make updates to the TRUE and FALSE values in each column, then use Data Loader to upload the changes using the **Update** feature.

**Removing Permissions**

To remove all access to an Object/Field, you will need to use the **Delete** feature in Data Loader to delete the appropriate ObjectPermissions/FieldPermissions records, using a list of Ids.

**Adding Permissions**

To add access where there is none, you will need to use the Insert feature in Data Loader to create new ObjectPermissions/FieldPermissions records.

**Necessary Fields**

**ObjectPermissions**

To data load ObjectPermissions records, include the following fields:

- Upserts are generally not recommended due to the extremely slow speed. It will most likely take much longer to make the upsert than it would to split the records into separate Insert and Update files.

- As stated above, you cannot have an ObjectPermissions or FieldPermissions record where all “permissions” fields are FALSE. If you try to update or insert one, you will get an error. Instead, to remove all access to an object, you have to delete the ObjectPermissions record.

- Custom Settings and Custom Metadata Types don’t have ObjectPermissions records related to them. Trying to insert or update them will just return an error.

**Dependencies**

- Watch out for permissions dependencies. When updating permission using the Profile edit page for example, Salesforce will automatically enable dependent permissions when needed. When data loading permissions, Salesforce will not automatically update user or system permissions on the profile if you try to update an object permission that has a dependency. Instead the update or insert will fail and you will get an error on that row. Accounts in particular have a large number of dependencies. Example:

```

FIELD_INTEGRITY_EXCEPTION: Permission Convert Leads depends on permission(s): Create Account; Permission Read All Asset depends on permission(s): Read All Account; Permission Read All Contract depends on permission(s): Read All Account; Permission Read All Dsx_Invoice__c depends on permission(s): Read All Account; Permission Read All Orders__c depends on permission(s): Read All Account; Permission Read All OrgChartPlus__ADP_OrgChartEntityCommon__c depends on permission(s): Read All Account; Permission Read All OrgChartPlus__ADP_OrgChart__c depends on permission(s): Read All Account; Permission Read All Partner_Keyword_Mapping__c depends on permission(s): Read All Account; Permission Read All Zuora__CustomerAccount__c depends on permission(s): Read All Account

```

- Additionally, keep in mind what is required at the Object level when setting certain permissions. For example, all levels of access (Edit, Create, etc..) require Read access. Delete access requires Read as well as Edit. Modify All requires all levels of access except Create. Salesforce will not allow you to data load permissions with illegal combinations of CRED access.

**Modify All Data**

- When using SOQL to query object permissions, be aware that some object permissions are enabled because a user permission requires them. The exception to this rule is when “Modify All Data” is enabled on the Profile or Permission Set (*note: not to be confused with the "Modify All" CRED permission*). While it enables all object permissions, it doesn’t physically store any object permission records in the database. As a result, unlike object permissions that are required by a user permission - such as “View All Data” or “Import Leads” - the query still returns permission sets with “Modify All Data,” but the object permission record will contain an invalid ID that begins with “000”. This ID indicates that the profile has full access due to “Modify All Data” and the object permission record can’t be updated or deleted.

- **To remove full access from these objects, disable “Modify All Data” at the Profile level, and then delete the resulting object permission record.**

**Resources**

**Object Permissions:**

[https://developer.salesforce.com/docs/atlas.en-us.api.meta/api/sforce\_api\_objects\_objectpermissions.htm](https://www.google.com/url?q=https%3A%2F%2Fdeveloper.salesforce.com%2Fdocs%2Fatlas.en-us.api.meta%2Fapi%2Fsforce_api_objects_objectpermissions.htm&sa=D&sntz=1&usg=AFQjCNF8UoF2w7bFhUtD-ozf2oCpAXJ9oQ)

**Field Permissions:**

[https://developer.salesforce.com/docs/atlas.en-us.api.meta/api/sforce\_api\_objects\_fieldpermissions.htm](https://www.google.com/url?q=https%3A%2F%2Fdeveloper.salesforce.com%2Fdocs%2Fatlas.en-us.api.meta%2Fapi%2Fsforce_api_objects_fieldpermissions.htm&sa=D&sntz=1&usg=AFQjCNHEVT5t3-Z9ufPlBK54fLCFynSrUg)

# Flow Conventions

Naming and structural conventions related to Flows and the Cloud Flow Engine.

# Flow General Notes

##### **Generalities**

**As of writing this page, August 10th 2023, Flows are primary source of automation on the Salesforce platform.** We left this sentence because the earlier iteration (from 2021) identified that Flows would replace Process Builder and we like being right.

It is very important to note that Flows have almost nothing to do, complexity-wise, with Workflows, Process Builder, or Approval Processes. Where the old tools did a lot of (over)-simplifying for you, Flow exposes a lot of things that you quite simply never had to think about before, such as execution context, DML optimization, batching, variables, variable passing, etc.

So if you are an old-timer upgrading your skills, note that **a basic understanding of programming (batch scripting is more than enough) helps a lot with Flow**.

If you're a newcomer to Salesforce and you're looking to learn Flow, same comment - this is harder than most of the platform (apart from Permissions) to learn and manipulate. This is normal.

##### **Intended Audience**

These conventions are written for all types of Salesforce professionals to read, but the target audience is the administrator of an organization. If you are an ISV, you will have considerations regarding packaging that we do not, and if you are a consultant, you should ideally use whatever the client wants (or the most stringent convention available to you, to guarantee quality).

##### **On Conventions**

As long as we're doing notes: conventions are opinionated, and these are no different. Much like you have different APEX trigger frameworks, you'll find different conventions for Flow. These specific conventions are made to be maintainable at scale, with an ease of modification and upgrade. This means that they by nature include boilerplate that you might find redundant, and specify very strongly elements (to optimize cases where you have hundreds of Flows in an organization). **This does not mean you need to follow everything.** A reader should try to understand *why* the conventions are a specific way, and then decide whether or not this applies to their org.

At the end of the day, as long as you use **any** convention in your organization, we're good. This one, another one, a partial one, doesn't matter. Just structure your flows and elements.

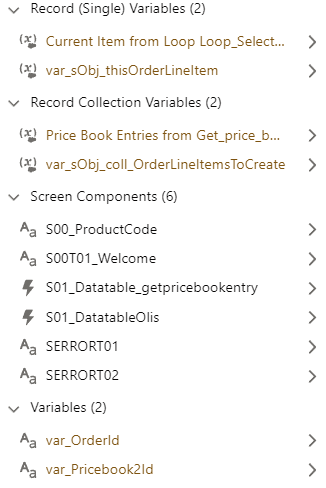

##### **On our Notation**

Finally, regarding the naming of sub-elements in the Flows: we've had conversations in the past about the pseudo-[hungarian notation](https://en.wikipedia.org/wiki/Hungarian_notation) that we recommend using. To clarify: we don't want to use Hungarian notation. We do so because Flow still doesn't split naming schemes between variables, screen elements, or data manipulation elements. This basically forces you to use Hungarian notation so you can have a `var_boolUserAccept` and a `S01_choiceUserAccept` (a variable holding the result of whether a user accepts some conditions, and the presentation in radio buttons of said acceptance), because you can't have two elements just named `UserAccept` even if technically they're different.

##### **On custom code, plugins, and unofficialSF**

On another note: Flow allows you to use custom code to extend its functionality. We define "custom code" by any LWC, APEX Class, and associated that are written by a human and plug into flow. We recommend using as little of these elements as possible, and as many as needed. **This includes UnofficialSF**.

Whether you code stuff yourself, or someone else does it for you, Custom Code always requires audit and maintenance. Deploying UnofficialSF code to your org basically means that you own the maintenance and audit of it, much like if you had developed it yourself. We emit the same reservations as using any piece of code on GitHub - if you don't know what it does **exactly**, you shouldn't be using it. This is because any third-party code is **not part of your MSA with Salesforce, and if it breaks, is a vector of attack, or otherwise negatively impacts your business, you have no official support or recourse.** This is not to say that these things are not great, or value-adding - but you are (probably) an admin of a company CRM, which means your first consideration should be **user data and compliance**, and ease of use coming second.

*Bonus useless knowledge: Flows themselves are just an old technology that Salesforce released in 2010: Visual Process Manager. That itself is actually just a scripting language: “The technology powering the Visual Process Manager is based on technology acquired from Informavores, a call scripting startup Salesforce bought last year.” (2009) Source*

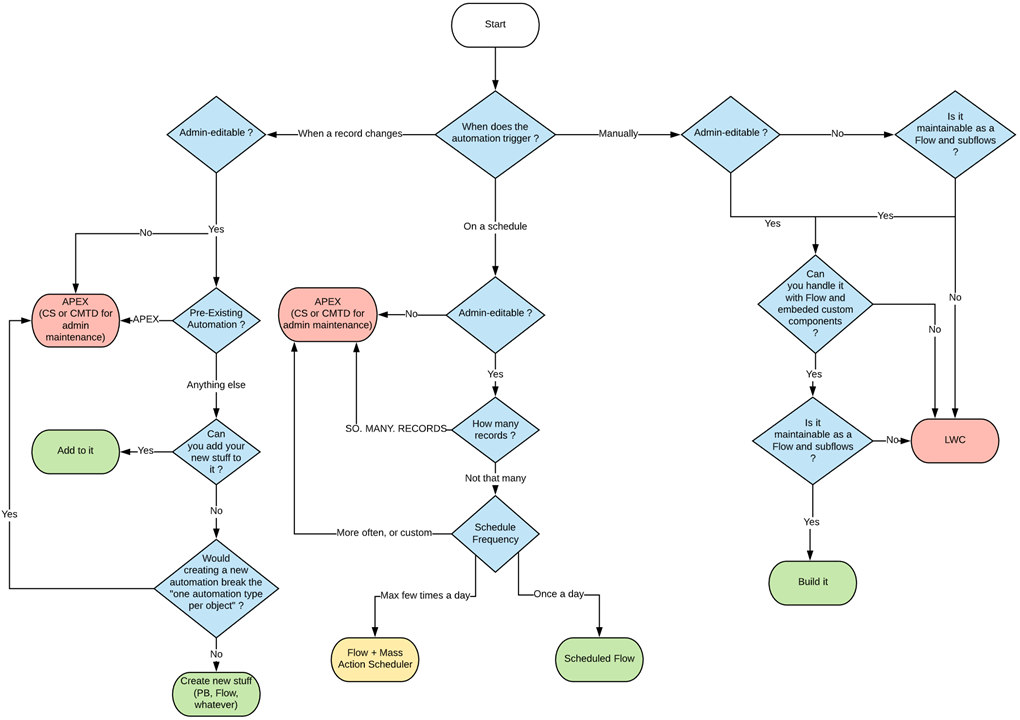

# What Automation do I create Flowchart

[](https://wiki.sfxd.org/uploads/images/gallery/2021-01/image-1610555495687.png)

# Flow Meta Conventions

## Read these Resources first

1. The official [Flows best practice doc](https://help.salesforce.com/articleView?id=flow_prep_bestpractices.htm&type=0). Note we agree on most things. Specifically the need to plan out your Flow first.

2. The [Flows limits doc](https://help.salesforce.com/articleView?id=flow_considerations_limit.htm&type=0). If you don't know the platform limits, how can you build around them?

3. The [Transactions limits doc](https://help.salesforce.com/articleView?id=flow_considerations_limit_transaction.htm&type=0). Same as above, gotta know limits to play around them.

4. [The What Automation Do I Create Flowchart](https://wiki.sfxd.org/books/best-practices/page/what-automation-do-i-create-flowchart). Not everything needs to be a Flow.

5. [The Record-Triggered Automation Guide](https://architect.salesforce.com/decision-guides/trigger-automation), if applicable.

## Best Practices

These are general best practices that do not pertain to individual flows but more to Flows in regards to their usage within an Organization.

#### **On Permissions**

Flows should **ALWAYS** execute in the smallest amount of permissions possible for it to execute a task.

Users should also ideally not have access to Flows they don't require.

Giving Setup access so someone can access `DeveloperName` is bad, and you should be using custom labels to store the ids and reference that instead, just to limit setup access.

**Use System mode sparingly. It is dangerous.** If used in a Communities setting, I REALLY hope you know why you're exposing data publicly over the internet or that you're only committing information with no GETs.

Users can have access granted to specific Flows via their Profiles and Permission Sets, which you should really be using to ensure that normal users can't use the Flow that massively updates the client base for example.

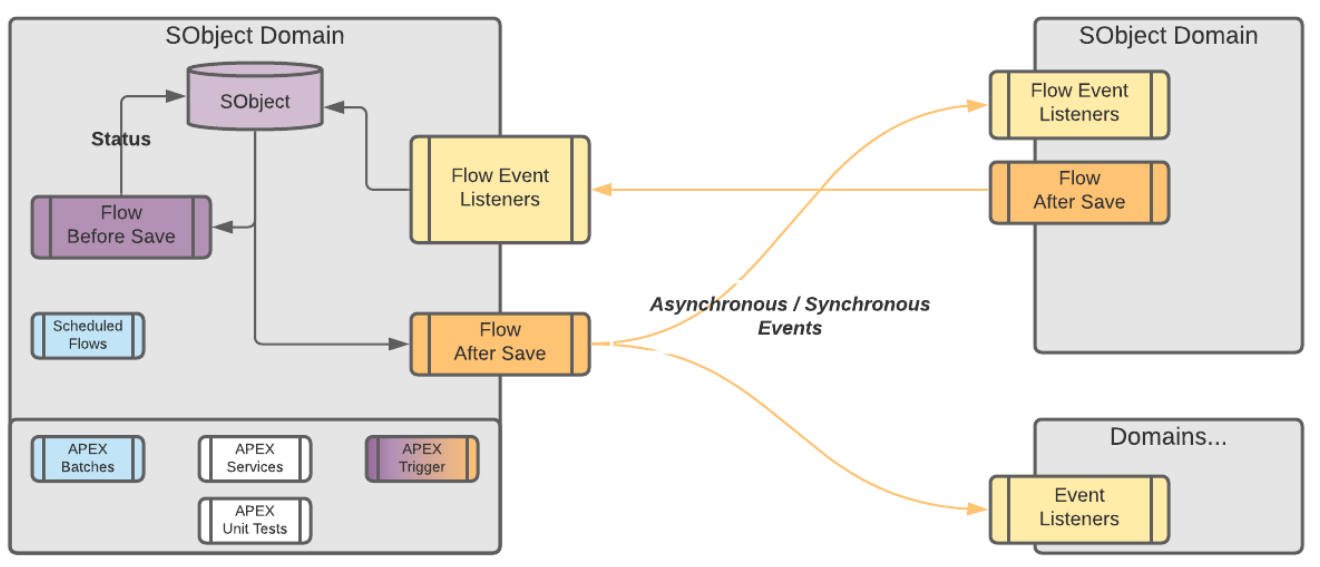

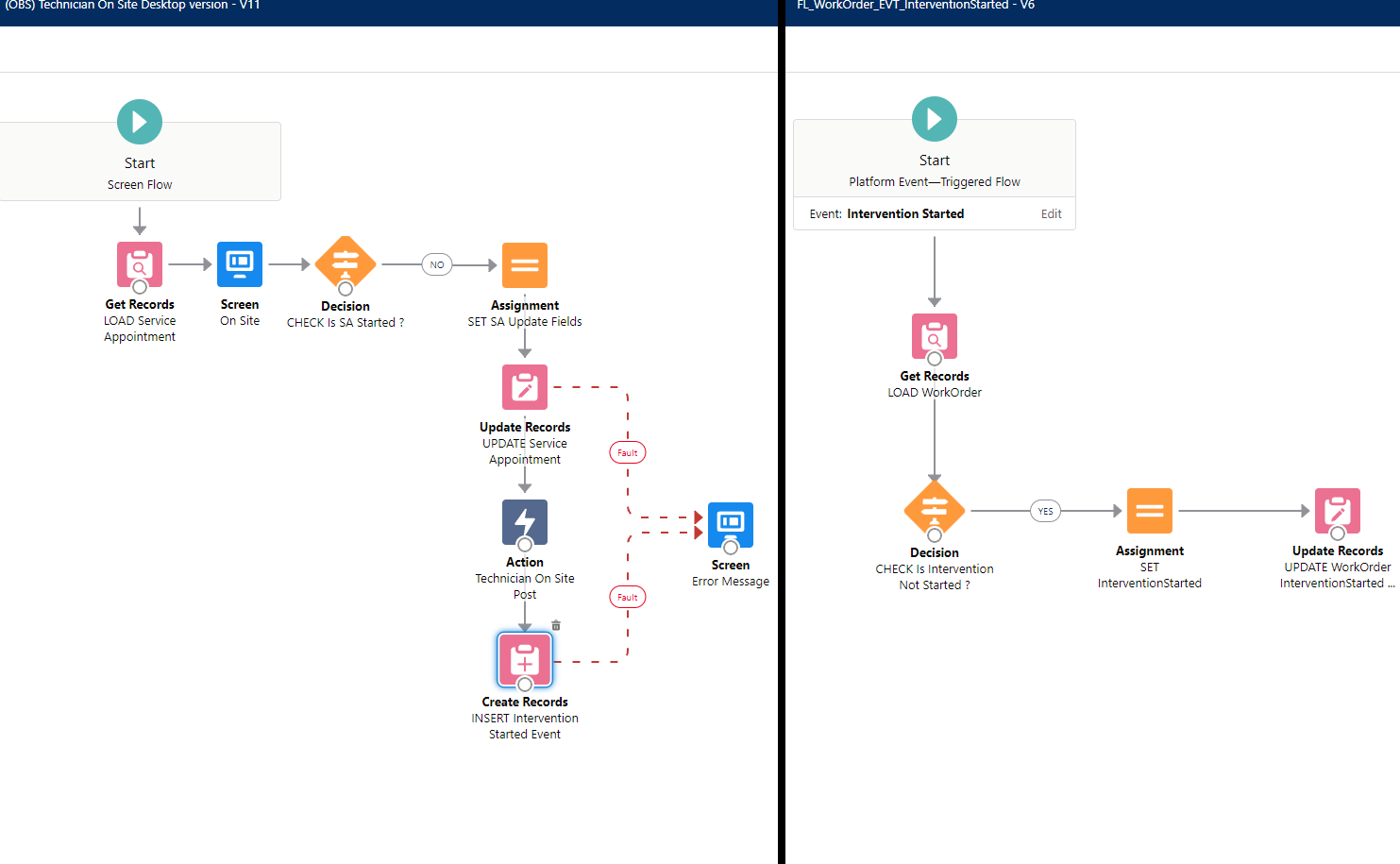

#### **Record-Triggered Flows, and Triggers should ideally not coexist on the same object in the same Context.**

"Context" here means the [APEX Trigger Context](https://developer.salesforce.com/docs/atlas.en-us.apexcode.meta/apexcode/apex_triggers_context_variables.htm). Note that not all of these contexts are exposed in Flow:

**- Screen Flows** execute outside of these contexts, but Update elements do not allow you to carry out operations in the `before` context.

**- Record Triggered Flow** execute either in `before` or `after` contexts, depending on what you chose at the Flow creation screen (they are named "Fast Record Updates" and "Other Objects and Related Actions", respectively, because it seems Salesforce and I disagree that training people on proper understanding of how the platform works is important).

The reason for the "same context" exclusivity is in case of multiple Flows and heavy custom APEX logic: in short, unless you plan *explicitly* for it, the presence of one or the other forces you to audit both in case of additional development, or routine maintenance.

You could technically leverage Flows and APEX perfectly fine together, but if you have a `before` Flow and a `before` Trigger both doing updates to fields, and you accidentally reference a field in both... debugging that is going to be fun.

So if you start relying on APEX Triggers, while this doesn’t mean you have to change all the Flows to APEX logic straight away, it does mean you need to plan for a migration path.

In the case were some automations need to be admin editable but other automations require custom code, you should be migrating the Triggers to APEX, and leveraging sub-flows which get called from your APEX logic.

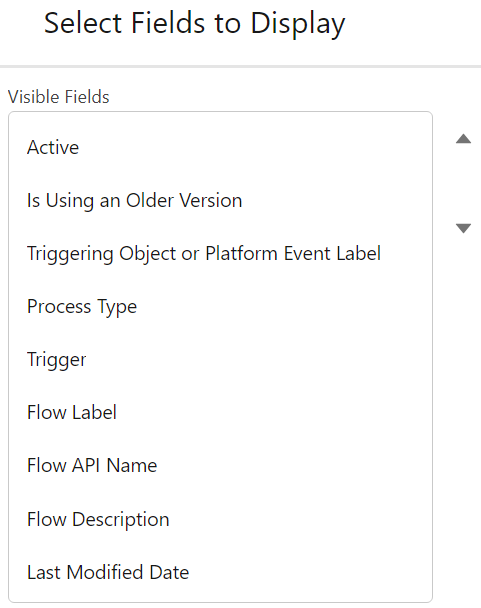

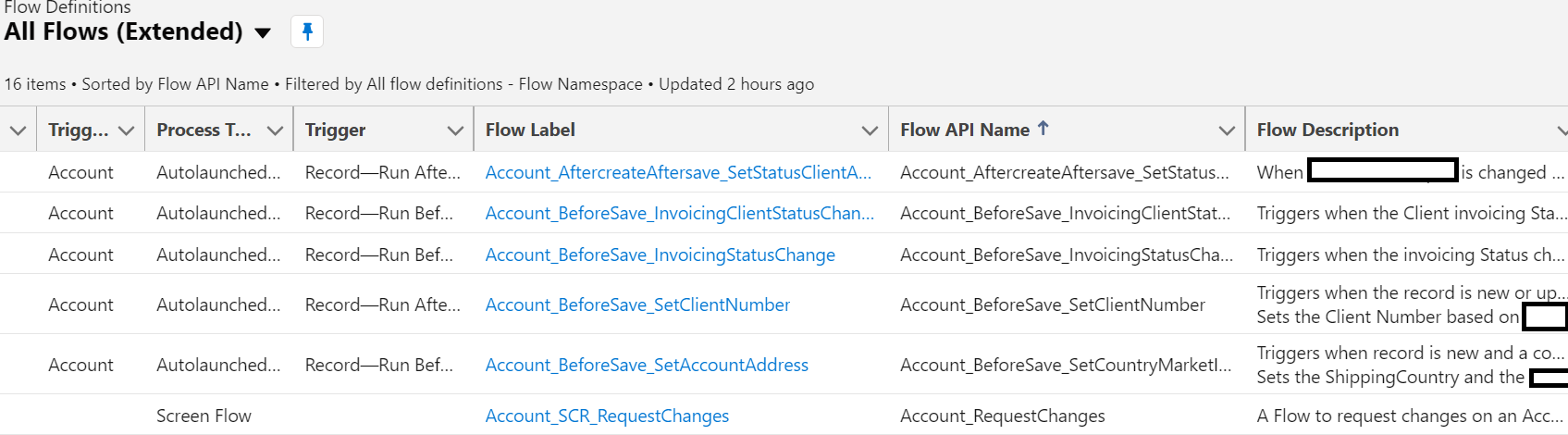

#### **Flow List Views should be used to sort and manage access to your Flows easily**

The default list view is not as useful as others can be.

We generally suggest doing at minimum one list view, and two if you have installed packages that ship Flows:

- - One List View that shows all active flows, with the following fields displayed:

`Active, Is Using an Older Version, Triggering Object or Platform Event Label, Process Type, Trigger, Flow Label, Flow API Name, Flow Description, Last Modified Date`

This will allow you to easily find your flows by Object (apart from Scheduled Flows or Sub-Flows, but this is handled via Naming Conventions), see if you started working on a Flow but didn't activate the last version, and view the beautiful descriptions that you will have set everywhere.

- One List View that shows all Package flows, which contains

`Active, Is Using an Older Version, Flow Namespace, Overridable, Overridden By, Overrides`

This allows you to easily manage your updates to these Flows that are sourced from outside your organization.

#### **Flows are considered Code for maintenance purposes**

Do NOT create or edit Flows in Production, especially a Record-Triggered flow.

If any user does a data load operation and you corrupt swaths of data, you will know the meaning of “getting gray hairs”, unless you have a backup - which I am guessing you will not have if you were doing live edits in production.

No, this isn't a second helping of our note in the [General Notes](https://wiki.sfxd.org/books/best-practices/page/flow-general-notes).

This is about your Flows - the ones you built, the ones you know very well and are proud of.

There are a **swath** of reasons to consider Flows to be Code for maintenance purposes, but in short:

- if you're tired, mess up, or are otherwise wrong, Production updates of Flows can have HUGE repercussions depending on how many users are using the platform, and how impactful your Flow is

- updating Flows in Production will break your deployment lifecycle, and cause problems in CI/CD tools if you use them

- updating Flows in Production means that you have no safe reproducibility environment unless you refresh a sandbox

- unless you know every interaction with other parts of the system, a minor update can have impact due to other automation - whether it be APEX, or other Flows.

In short - it's a short and admin-friendly development, but it's still development.

#### **On which automation to create**

In addition to our (frankly not very beautiful Flowchart), when creating automations, the order of priority should be:

- 1. **Object-bound, BEFORE Flows**

These are the most CPU-efficient Flows to create.

They should be used to set information that is required on Objects that are created or updated.

2. **User-bound Flows**

Meaning Screen flows. These aren’t tied to automation, and so are very CPU efficient and testable.

3. **Object-bound, Scheduled Flows**

If you can, create your flows as a Schedule rather than something that will spend a lot of time waiting for an action - a great example of this are scheduled emails one month after something happens.

Do test your batch before deploying it, though.

4. **Object-bound, AFTER Flows**

These are last because they are CPU intensive, can cause recursion, and generally can have more impact in the org than other sources of automation.

#### **On APEX and LWCs in Flows**

- **APEX or LWCs that are specifically made to be called from Flows should be clearly named and defined in a way that makes their identification and maintenance easier.**

- **Flows that call APEX or LWCs are subject to more limits and potential bugs than fully declarative ones.**

When planning to do one, factor in the maintenance cost of these parts.

Yes, this absolutely includes actions and components from the wonderful UnofficialSF. If you install unpackaged code in your organization, YOU are responsible for maintaining it.

- On a related note, **Don't use non-official components without checking their limits.**

Yes UnofficialSF is great, and it also contains components that are not bulkified or contain bugs.

To reiterate, if you install unpackaged code in your organization, YOU are responsible for maintaining it.

#### **Flow Testing and Flow Tests**

If at all possible, **Flows should be [Tested](https://help.salesforce.com/s/articleView?id=sf.flow_test.htm&type=5)**.This isn't always possible because of [these considerations](https://help.salesforce.com/s/articleView?id=sf.flow_considerations_feature_testing.htm&type=5), (which aren't actually exhaustive - I have personally seen edge cases where Tests fail but actual function runs, because of the way Tests are build, and I have also seen deployment errors linked to Tests). [Trailheads exist to help you get there](https://trailhead.salesforce.com/content/learn/modules/flow-testing-and-distribution/make-sure-your-flow-works).

A Flow Test is **not** just a way to check your Flow works. A proper test should:

- Test the Flow works

- Test the Flow works in other Permission situations

- Test the Flow **doesn't** work in critical situations you want to avoid \[if you're supposed to send one email, you should *probably catch the situation where you're sending 5* mil\]

... and in addition to that, a proper Flow Test will warn you **if things stop working down the line.**

Most of these boilerplates are *negative bets against the future* - we are expecting things to break, people to forget configuration, and updates to be made out of process. Tests are a way to mitigate that.

We currently consider Flow Tests to be "acceptable but still bad", which we expect to change as time goes on, but as it's not a critical feature, we aren't sure when they'll address the current issues with the tool.

Note that proper Flow Testing will probably become a requirement at some point down the line.

#### **On Bypasses**

Flows, like many things in Salesforce, can be configured to respect [Bypasses](https://wiki.sfxd.org/books/best-practices/page/bypasses).

In the case of Flows, you might want to call these "[feature flags](https://developer.salesforce.com/docs/atlas.en-us.packagingGuide.meta/packagingGuide/fma_best_practices.htm)".

This is a GREAT best practice, but is generally overkill unless you are a very mature org with huge amounts of processes.

# Flow Structural Conventions - Common Core

As detailed in the General Notes section, these conventions are heavily opinionated towards maintenance and scaling in large organizations. The conventions contain:

- a "common core" set of structural conventions that apply everywhere (this page!)

- conventions for [Record Triggered Flows](https://wiki.sfxd.org/books/best-practices/page/flow-structural-conventions-record-triggered) specifically

- conventions for [Scheduled Flows](https://wiki.sfxd.org/books/best-practices/page/flow-structural-conventions-scheduled) specificallyDue to their nature of being triggered by the user and outside of a specific record context, Screen Flows do not require specific structural adaptations at the moment that are not part of the common core specifications.

## Common Core Conventions

#### On System-Level Design

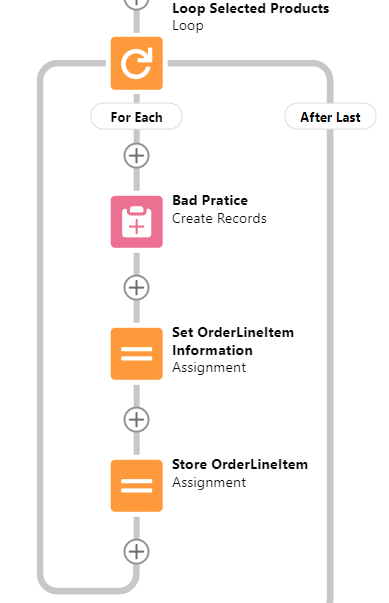

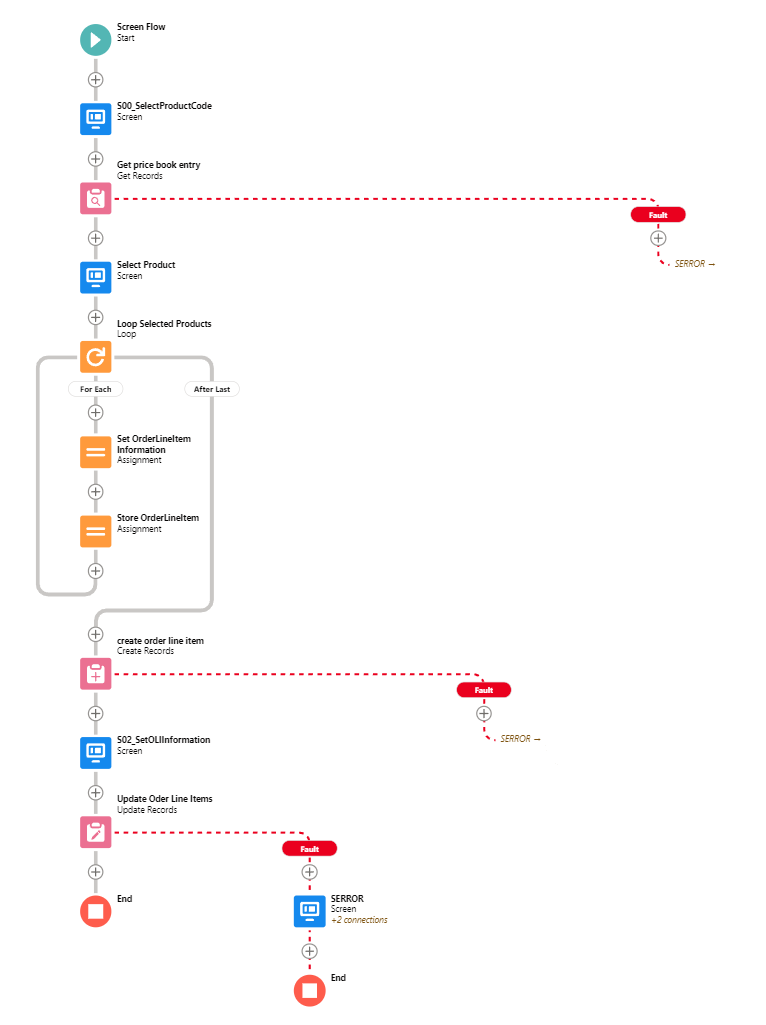

##### **Do not do DMLs or Queries in Loops.**

**Simpler: No pink squares in loops.**

**[DML](https://developer.salesforce.com/docs/atlas.en-us.apexref.meta/apexref/apex_dml_section.htm)** is Data Manipulation Language. Basically it is what tells the database to change stuff. DML Operations include Insert, Update, Upsert, and Delete, which you should know from Data Loader or other such tools.

Salesforce now actually warns you when you're doing this, but it still bears saying.

*Don't do this*

You really **must** not do this because:

- it can break your Flow. Salesforce will still try to optimize your DML operations, but it will often fail due to the changing context of the loop. This will result in you doing one query or update per record in your loop, which will send you straight into [Governor Limit](https://developer.salesforce.com/docs/atlas.en-us.apexcode.meta/apexcode/apex_gov_limits.htm) territory.

- even if it doesn't break your Flow, it will be SLOW AS HELL, due to the overhead of all the operations you're doing

- it's unmaintainable at best, because trying to figure out the interaction between X individual updates and all the possible automations you're triggering on the records you're updating or creating is nigh impossible.

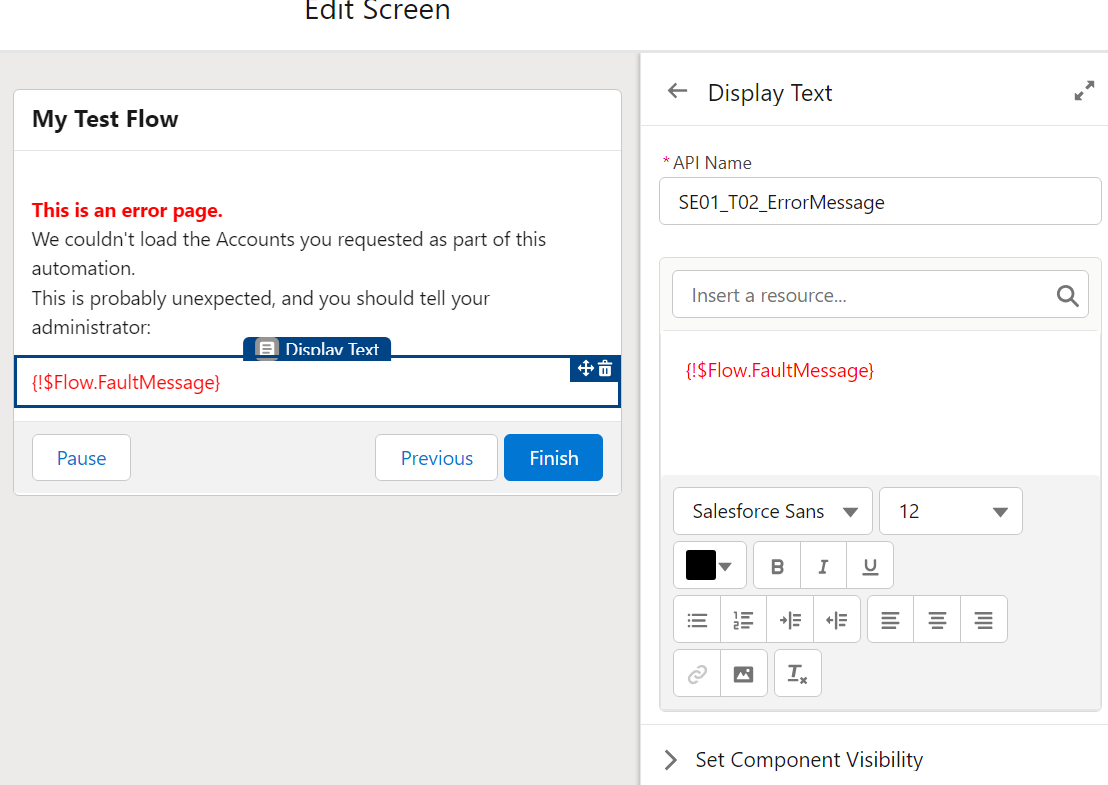

##### **All Pink (DML or Query) elements should have Error handling**

Error, or Fault Paths, are available both in Free Design mode and the Auto-Layout Mode. In Free mode, you need to handle all possible other paths before the Fault path becomes available. In Auto-Layout mode, you can simply select Fault Path.

Screen Flow? Throw a Screen, and display what situation could lead to this. Maybe also send the Admin an email explaining what happened.

Record-triggered Flow? [Throw an email](https://help.salesforce.com/s/articleView?id=sf.flow_ref_elements_actions_sendemail.htm&type=5) to the APEX Email Exception recipients, or emit a [Custom Notification](https://help.salesforce.com/s/articleView?id=sf.notif_builder_custom.htm&language=en_US&type=5).

Hell, better yet throw that logic into a Subflow and call it from wherever.

(Note that if you are in a sandbox with email deliverability set to System Only, regular flow emails and email alerts will not get sent.)

[](https://wiki.sfxd.org/uploads/images/gallery/2022-02/image-1644417882117.png)

Handling Errors this way allows you to:

- not have your users presented with UNEXPECTED EXCEPTION - YOUR ADMIN DID THINGS BADLY

- maybe deflect a few error messages, in case some things can be fixed by the user doing things differently

- have a better understanding of how often Errors happen.

You want to supercharge your error handling? Audit [Nebula Logger](https://github.com/jongpie/NebulaLogger) to see if it can suit your needs. With proper implementation (and knowledge of how to service it, remember that installed code is still code that requires maintenance), Nebula Logger will allow you to centralize **all** logs in your organization, and have proper notification when something happens - whether in Flow, APEX, or whatever.

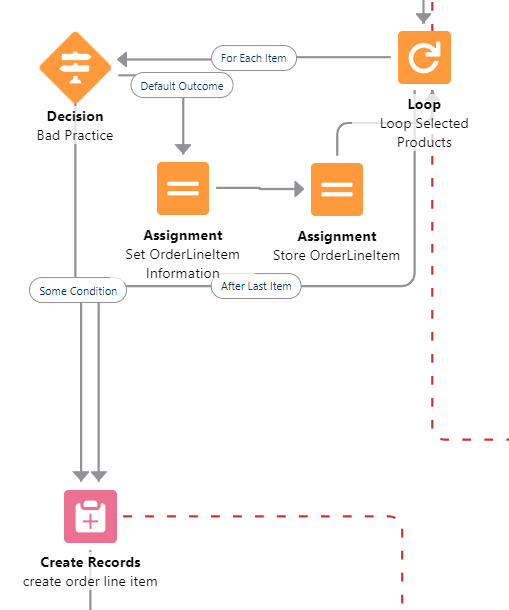

##### **Don't exit loops based on decision checks**

The Flow engine doesn't support that well and you will have weird and confusing issues if you ever go back to the main loop.

*Don’t do this either - always finish the loop*

Issues include variables not being reset, DML errors if you do come back to the loop, and all around general unpredictable situations.

You *can* still do this if you absolutely NEVER come back to the loop, but it's bad design.

##### **Do not design Flows that will have long Wait elements**

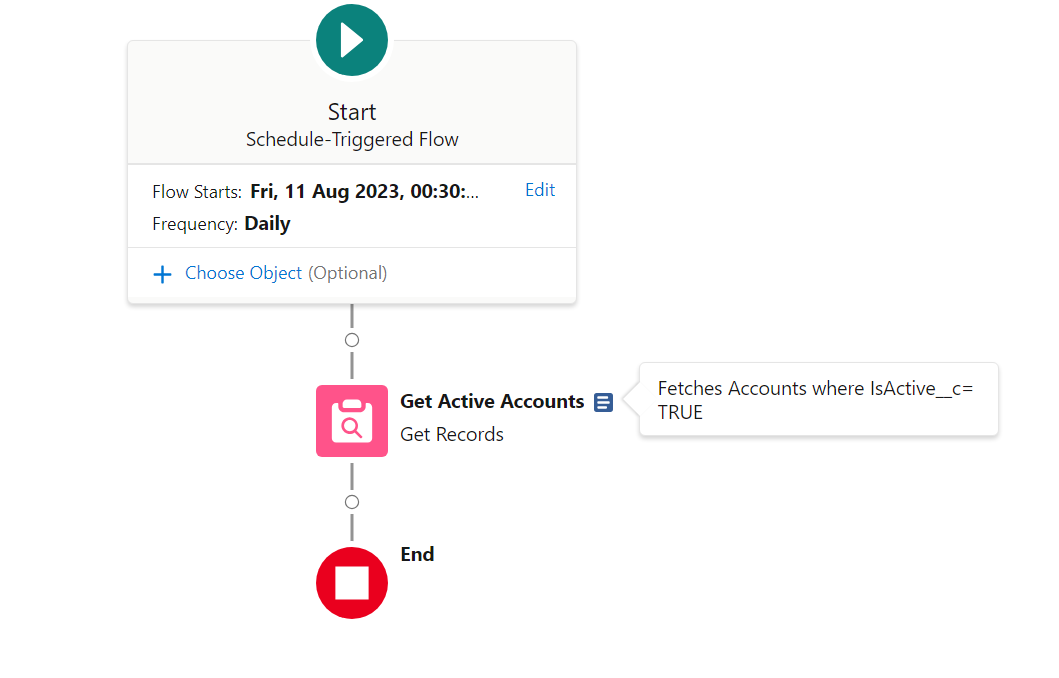

This is often done by Admins coming from Workflow or Process Builder space, where you could just say "do that 1 week before contract end date" or "1 day after Opportunity closure". This design is sadly as outdated as the tools that permitted it.

Doing this will have you exceed your Paused Interview limits, and actions just won't be carried out.

A proper handling of "1 day before/after whenever", in Flow, is often via a Scheduled Flow.

Scheduled Flows execute once daily (or more if you use plugins to allow it), check conditions, and execute based on these conditions. In the above case, you would be creating a Scheduled Flow that :

- Queries all Contract that have an End Date at `TODAY()-7`

- Proceeds to loop over them and do whatever you need it to

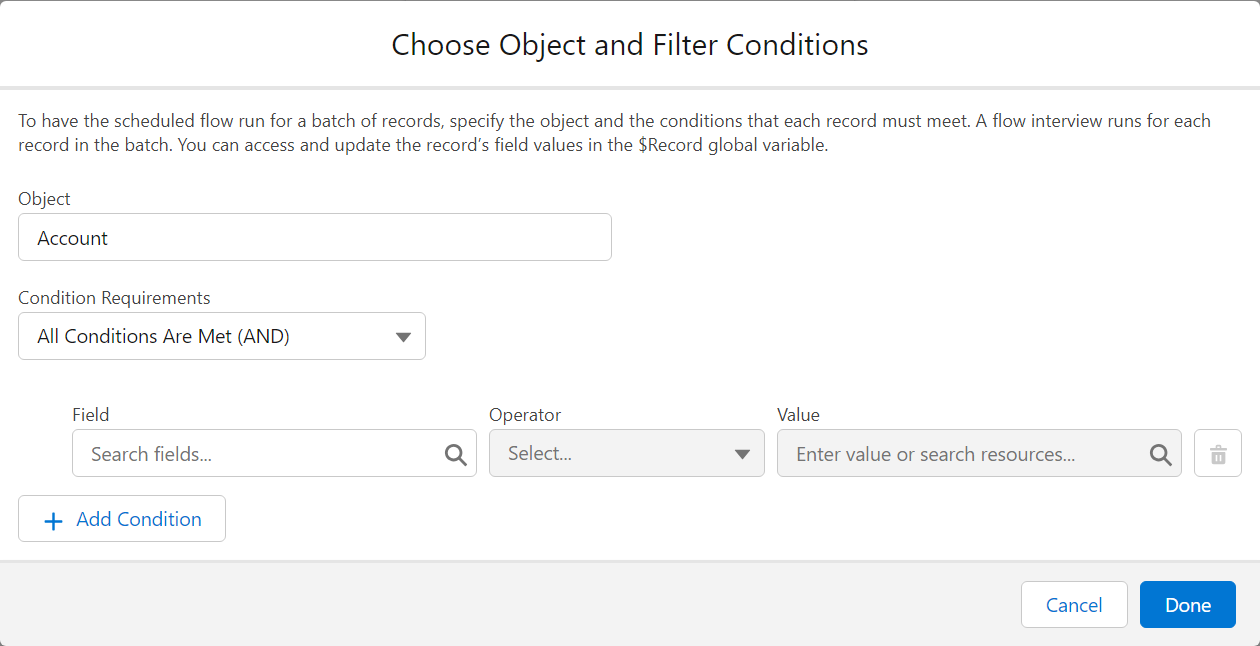

Despite it not being evident in the Salesforce Builder, there is a VERY big difference between the criteria in the Schedule Flow execution start, and an initial GET.

- Putting criteria in the Start Element has less conditions available, but effectively limits the scope of the Flow to only these records, which is great in **big environments**. It also fires **One Flow Interview per Record**, and then bulkifies operations at the end - so doing a **GET** if you put a criteria in the Start element should be done after due consideration only.

- On the opposite, putting no criteria and relying on an initial Get does a single Flow Interview, and so will run less effectively on huge amounts of records, *but* does allow you to handle more complex selection criteria.

##### **Do not Over-Optimize your Flows**

When Admins start becoming great at Flows, everything looks like a Flow.

The issue with that is that sometimes, Admins will start building Flows that shouldn't be built because Users should be using standard features (yes, I know, convincing Users to change habits can be nigh impossible but is sometimes still the right path)... and sometimes, they will keep at building Flows that just should be APEX instead.

If you are starting to hit CPU timeout errors, Flow Element Count errors, huge amounts of slowness... You're probably trying to shove things in Flow that should be something else instead.

APEX has more tools than Flows, as do LWCs. Sometimes, admitting that Development is necessary is not a failure - it's just good design.

#### On Flow-Specific Design

##### **Flows should have one easily identifiable Triggering Element**

This relates to the [Naming Conventions](https://wiki.sfxd.org/books/best-practices/page/flow-naming-conventions).

**Flow Type**

**Triggering Element**

Record-Triggered Flows

It is the Record that triggers the DML

Event-based Flows

It should be a single event, as simple as possible.

Screen Flows

This should be either a single recordId, a single sObject variable, or a single sObject list variable. In all cases, the Flow that is being called should query what it needs by itself, and output whatever is needed in its context.

Subflows

The rule can vary - it can be useful to pass multiple collections to a Subflow in order to avoid recurring queries on the same object. However, passing multiple single-record variables, or single text variables, to a Subflow generally indicates a design that is overly coupled with the main flow and should be more abstracted.

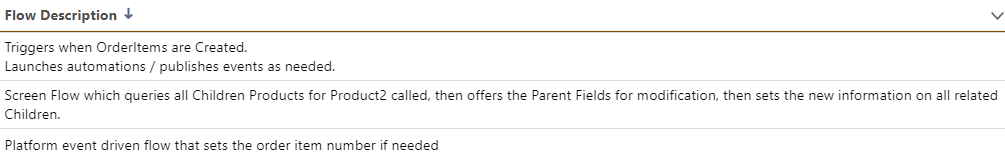

##### **Fill in the descriptions**

You'll thank yourself when you have to maintain it in two years.

Descriptions should not be technical, but functional. A Consultant should be able to read your Flow and know what it does technically. The Descriptions should therefore explain what function the Flow provides within the given Domain (if applicable) of the configuration.

*Descriptions shouldn’t be too technical.*

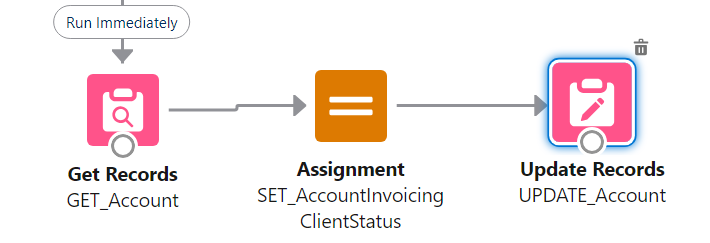

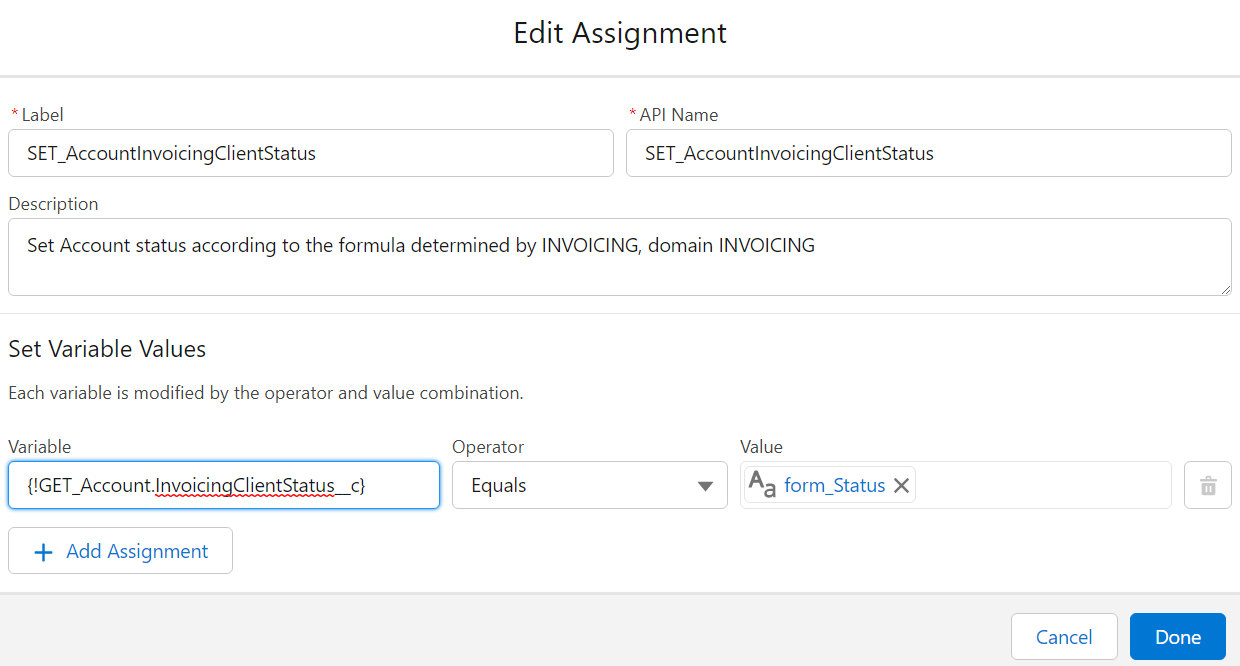

##### **Don't use the "Set Fields manually" part of Update elements**

Yes, it's possible. It's also bad practice. You should always rely on a record variable, which you Assign values to, before using Update with "use the values from a record variable". This is mainly for maintenance purposes (in 99% of cases you can safely ignore pink elements in maintenance to know where something is set), but is also impactful when you do multi-record edits and you *have* to manipulate the record variable and store the resulting manipulation in a record collection variable.

[](https://wiki.sfxd.org/uploads/images/gallery/2023-08/image-1691673305766.png)

[](https://wiki.sfxd.org/uploads/images/gallery/2023-08/image-1691673360337.png)

##### **Try to pass only one Record variable or one Record collection to a Flow or Subflow**

See "Tie each Flow to a Domain".

Initializing a lot of Record variables on run often points to you being able to split that subflow into different functions. Passing Records as the Triggering Element, and *configuration information* as variables is fine within reason.